Progressive Motion Representation Distillation With Two-Branch Networks for Egocentric Activity Recognition

Image credit: Zhouyang

Abstract

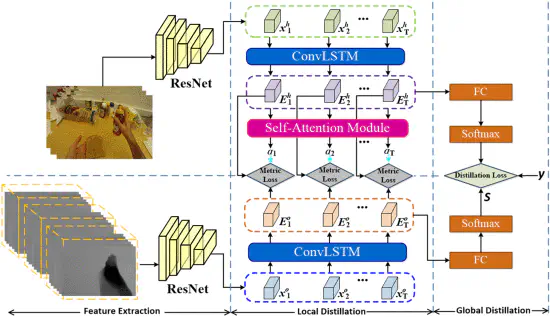

Video-based egocentric activity recognition involves fine-grained spatio-temporal human-object interactions. State-of-the-art methods, built on a two-branch architecture, rely on pre-computed optical flow to provide motion information. However, this two-stage strategy is computationally intensive, storage-demanding, and not task-oriented, which hinders its deployment in real-world applications. Although there have been numerous attempts to replace optical flow with other motion representations, most of these methods were designed for third-person activities and do not capture fine-grained cues. To tackle these issues, in this letter we propose a progressive motion representation distillation (PMRD) method, based on two-branch networks, for egocentric activity recognition. We exploit a generalized knowledge distillation framework to train a hallucination network, which receives RGB frames as input and produces motion cues under the guidance of the optical-flow network. Specifically, we propose a progressive metric loss, which distills local fine-grained motion patterns at each temporal progress level. To make the distillation framework concentrate on informative frames, we integrate a temporal attention mechanism into the metric loss. Moreover, a multi-stage training procedure is employed to learn the hallucination network efficiently. Experimental results on three egocentric activity benchmarks demonstrate the state-of-the-art performance of the proposed method.
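The abstract does not give the exact form of the progressive metric loss, so the following is only an illustrative sketch of the general idea, not the authors' formulation. It assumes that a "temporal progress level" is a growing prefix of the clip, that the metric is a squared Euclidean distance between attention-pooled student (hallucination) and teacher (optical-flow) features, and that attention weights come from a softmax over per-frame scores. The names `progressive_distillation_loss`, `attn_scores`, and `num_levels` are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of per-frame scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_mean(frames, weights):
    """Attention-weighted average of per-frame feature vectors."""
    total = sum(weights)
    dim = len(frames[0])
    return [
        sum(w * f[d] for f, w in zip(frames, weights)) / total
        for d in range(dim)
    ]


def progressive_distillation_loss(student, teacher, attn_scores, num_levels=4):
    """Assumed loss: average squared distance between pooled student and
    teacher features, evaluated on progressively longer clip prefixes."""
    assert len(student) == len(teacher) == len(attn_scores)
    num_frames = len(student)
    weights = softmax(attn_scores)  # assumed temporal attention
    loss = 0.0
    for level in range(1, num_levels + 1):
        # Assumption: level k covers the first k/num_levels of the clip.
        end = max(1, math.ceil(num_frames * level / num_levels))
        s = weighted_mean(student[:end], weights[:end])
        t = weighted_mean(teacher[:end], weights[:end])
        loss += sum((a - b) ** 2 for a, b in zip(s, t))
    return loss / num_levels
```

In this sketch, frames with higher attention scores contribute more to every prefix they fall in, which is one simple way to bias a distillation loss toward informative frames. In a real training setup the features would be tensors from the two branches and the loss would be differentiated by an autograd framework.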