Image credit: Zhouyang

Abstract

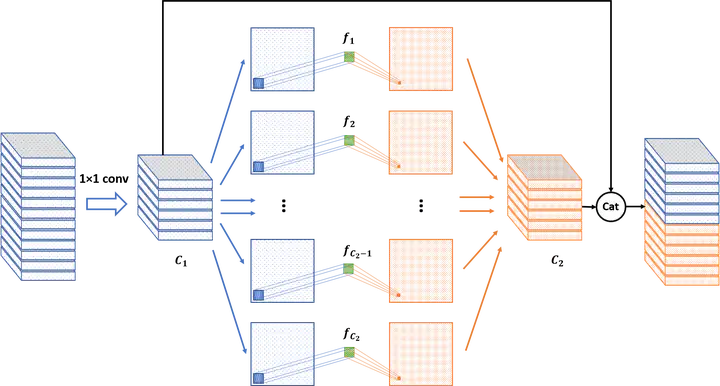

How to handle an arbitrary number of input images is a fundamental problem for learning-based photometric stereo methods. Existing approaches adopt max-pooling or observation maps to fuse features extracted from an arbitrary number of images. However, these methods either discard a large portion of the features from the input images, which hurts feature utilization and accuracy, or ignore the constraints from the intra-image spatial domain. In this paper, we explore how to efficiently fuse features from a variable number of input images. First, we propose a bilateral extraction module, which categorizes features into positive and negative, to retain as many useful features as possible in the fusion stage. Second, we apply top-k pooling to both the positive and negative features, selecting the k maximum response values from all features. The two proposed modules are “plug-and-play” and can be used in different fusion tasks. We further propose a hierarchical photometric stereo network, namely HPS-Net, which applies bilateral extraction and top-k pooling to multiscale features. Experiments on a widely used benchmark show that our framework improves over the conventional max-pooling method and that the proposed HPS-Net outperforms existing learning-based photometric stereo methods.
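The fusion idea described above can be illustrated with a minimal NumPy sketch. It is not the HPS-Net implementation: the function names (`bilateral_split`, `top_k_pool`, `fuse`), the sign-based split into positive and negative parts, and the `(num_images, channels)` feature layout are all assumptions made for illustration. The sketch only shows why the fused output has a fixed size and does not depend on the order of the input images.

```python
import numpy as np


def bilateral_split(features):
    """Split features by sign (an assumed form of bilateral extraction).

    features: array of shape (num_images, channels).
    Returns the positive part and the magnitude of the negative part.
    """
    positive = np.maximum(features, 0.0)
    negative = np.maximum(-features, 0.0)
    return positive, negative


def top_k_pool(features, k):
    """Keep the k largest responses per channel across the image axis."""
    if features.shape[0] < k:
        # Hypothetical policy: this sketch simply rejects too few images.
        raise ValueError("need at least k input images")
    # Sort ascending along the image axis, reverse, keep the first k rows.
    return np.sort(features, axis=0)[::-1][:k]


def fuse(features, k):
    """Fuse per-image features into a fixed-size (2 * k, channels) array."""
    positive, negative = bilateral_split(features)
    return np.concatenate(
        [top_k_pool(positive, k), top_k_pool(negative, k)], axis=0
    )


# Three images, two channels each (toy values).
features = np.array([[1.0, -2.0], [3.0, 0.5], [-4.0, 2.0]])
fused = fuse(features, k=2)
```

With `k = 1` and the negative branch dropped, `fuse` reduces to ordinary max-pooling over non-negative responses, which is the baseline the abstract compares against. Larger `k` keeps more responses per channel while the output shape stays independent of the number of input images.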